Original author: Evan ⨀

Original translation: TechFlow

The intersection of crypto and AI is still in its very early stages. Although countless intelligent agents and tokens have emerged on the market, most projects seem to be just a numbers game, with teams trying to get as many “shots” as possible.

While AI is the technological revolution of our generation, its combination with crypto is seen more as a liquidity tool for early exposure to the AI market.

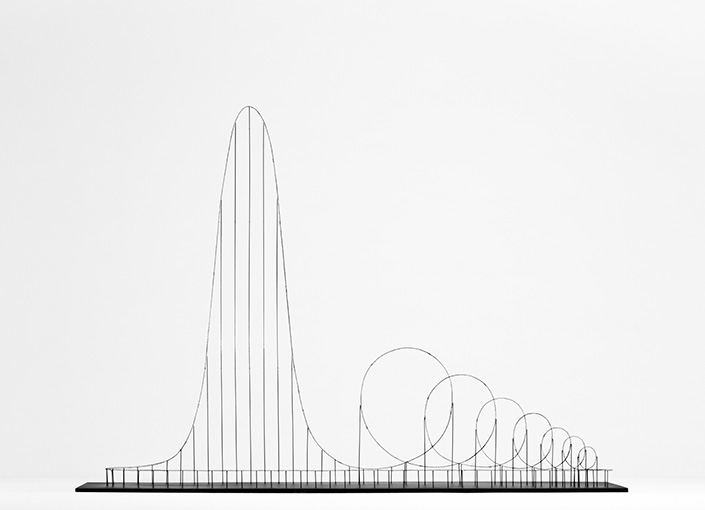

As a result, we have witnessed multiple cycles in this intersection where most narratives have experienced a similar “roller coaster” rise and fall.

How to break the hype cycle?

So, where will the next big opportunity for crypto and AI come from? What kind of applications or infrastructure can truly create value and find market fit?

This article will attempt to explore the main points of interest in this area through the following framework:

How can AI help the crypto industry?

How can the crypto industry give back to AI?

I’m particularly interested in the second point, the opportunities in decentralized AI, and will introduce some exciting projects.

1. How does AI help the crypto industry?

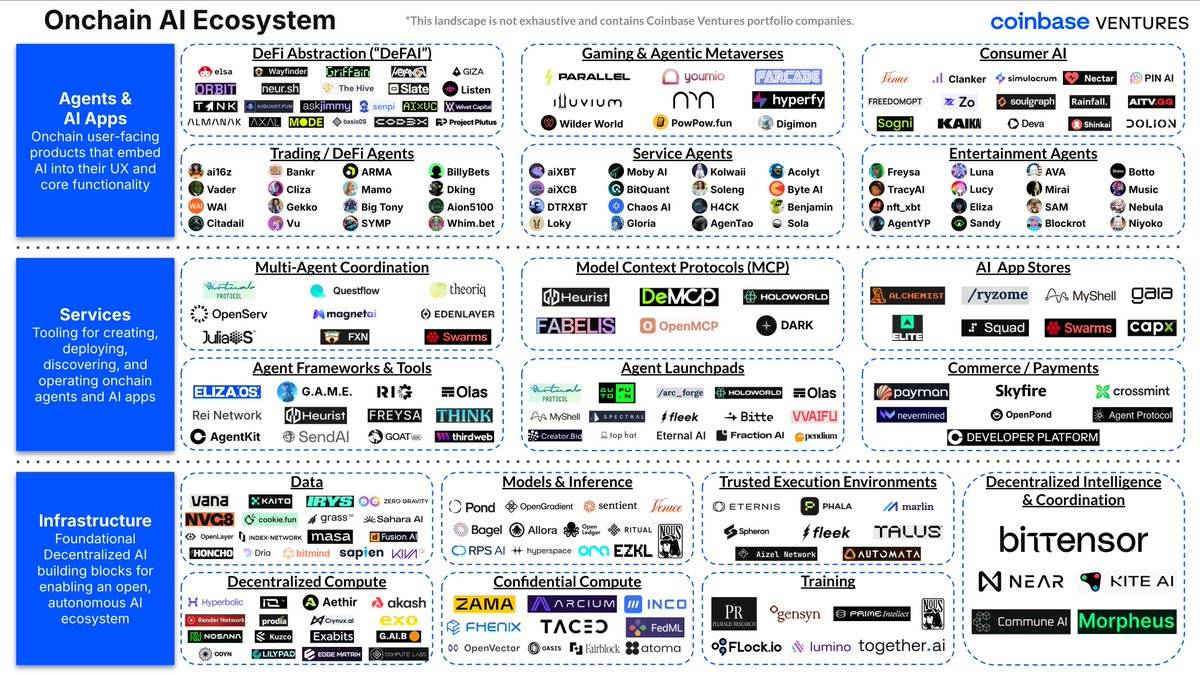

The following is a more comprehensive ecosystem map provided by Coinbase Ventures:

https://x.com/cbventures/status/1923401975766355982/photo/1

While there are many verticals to explore in consumer AI, intelligent agent frameworks, and enablement platforms, AI is already impacting the crypto experience in three main ways:

1. Developer Tools

Similar to Web2, AI is accelerating the development of crypto projects through no-code and low-code platforms. Many of these applications share goals with their Web2 counterparts, such as Lovable.dev.

Teams like @poofnew and @tryoharaAI are helping non-technical developers get online quickly and iterate without deep knowledge of smart contracts. This not only shortens the time to market for crypto projects, but also lowers the barrier to entry for market savvy and creative people, even if they don’t have a technical background.

Additionally, other parts of the developer experience have been improved, such as smart contract testing and security: @AIWayfinder, @octane_security

2. User Experience

Although the crypto space has made significant progress in onboarding and wallet experiences (e.g. Bridge, Sphere Pay, Turnkey, Privy), the core crypto user experience (UX) has not changed substantially. Users still need to manually navigate complex block explorers and perform multi-step transactions.

AI agents are changing this and becoming a new interaction layer:

Search and Discovery: Teams are racing to build tools like “Perplexity for blockchain.” These chat-based natural language interfaces allow users to easily find market information (alpha), understand smart contracts, and analyze on-chain behavior without having to delve into raw transaction data.

The bigger opportunity is that agents can become the gateway through which users discover new projects, yield opportunities, and tokens. Similar to how Kaito helps projects gain attention through its launchpad, agents can learn user behavior and proactively surface relevant content. This could support a sustainable business model, with agents earning income through revenue sharing or affiliate fees.

Intent-based actions: Instead of clicking through multiple screens, users can simply express their intent (e.g., “convert $1000 of ETH into the highest-yielding stablecoin position”) and the agent can automatically execute complex, multi-step transactions.

Error Prevention: AI can also prevent common mistakes, such as entering the wrong transaction amount, purchasing scam tokens, or approving malicious contracts.
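As a minimal sketch of the intent-based flow described above, the snippet below turns the example intent into an ordered list of steps. The venue names, APY figures, and step schema are hypothetical placeholders; a real agent would pull live rates, simulate each transaction, and ask the user to confirm before signing anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StablecoinPosition:
    protocol: str  # hypothetical venue name
    asset: str     # stablecoin held in the position
    apy: float     # quoted annual yield, e.g. 0.052 for 5.2%


def plan_intent(amount_usd, positions):
    """Plan 'convert $X of ETH into the highest-yielding stablecoin position'."""
    if amount_usd <= 0:
        raise ValueError("amount must be positive")
    if not positions:
        raise ValueError("no candidate positions")
    best = max(positions, key=lambda p: p.apy)
    # One user sentence expands into the multi-step sequence a user would
    # otherwise click through by hand.
    return [
        {"action": "swap", "sell": "ETH", "buy": best.asset, "usd_value": amount_usd},
        {"action": "approve", "spender": best.protocol, "asset": best.asset},
        {"action": "deposit", "protocol": best.protocol, "asset": best.asset},
    ]


# Hypothetical candidates; none of these are real quotes.
candidates = [
    StablecoinPosition("LendingPoolA", "USDC", 0.041),
    StablecoinPosition("VaultB", "DAI", 0.058),
    StablecoinPosition("LendingPoolC", "USDT", 0.049),
]
plan = plan_intent(1000, candidates)
for step in plan:
    print(step)
```

The error-prevention point fits naturally here as well: each planned step is a place where an agent could check the amount, the token, and the approval target before execution.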

More on how Hey Anon automated DeFAI :

3. Trading tools and DeFi automation

Currently, many teams are racing to develop intelligent agents to help users obtain smarter trading signals, trade on their behalf, or optimize and manage strategies.

Yield Optimization

Agents are able to automatically move funds between lending protocols, decentralized exchanges (DEX), and yield farming opportunities based on interest rate changes and risk profiles.

Trade Execution

AI may be able to execute strategies better than human traders by processing market data faster, avoiding emotional decisions, and following preset frameworks.

Portfolio Management

Agents are able to rebalance portfolios, manage risk exposure, and capture arbitrage opportunities across different chains and protocols.
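The yield-optimization behavior above can be sketched as a simple decision rule. Everything here is an assumption for illustration: the venue names, the APY and risk numbers, and the `min_gain` threshold are made up, and gas costs and slippage are not modeled.

```python
def pick_venue(venues, max_risk, current=None, min_gain=0.005):
    """Return the venue the agent should hold funds in.

    venues: dict of name -> (apy, risk_score); a lower risk_score is safer.
    The agent only moves when the APY improvement is at least min_gain,
    so it does not churn on small rate changes.
    """
    eligible = {name: apy for name, (apy, risk) in venues.items() if risk <= max_risk}
    if not eligible:
        return current  # nothing fits the risk profile, so stay put
    best = max(eligible, key=eligible.get)
    if current in eligible and eligible[best] - eligible[current] < min_gain:
        return current
    return best


# Hypothetical venues and rates.
venues = {"LendA": (0.040, 2), "LendB": (0.046, 3), "FarmC": (0.120, 8)}

conservative = pick_venue(venues, max_risk=5, current="LendA")
sticky = pick_venue(venues, max_risk=5, current="LendA", min_gain=0.01)
aggressive = pick_venue(venues, max_risk=10, current="LendA")
print(conservative, sticky, aggressive)
```

The risk cap is what separates the conservative and aggressive outcomes: the same rates produce different moves for different user profiles.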

If an agent can truly and consistently manage funds better than humans, it will be an order-of-magnitude improvement over existing DeFi AI agents. Current DeFi AI mainly helps users execute intentions they have already formed; the next step is fully automated fund management. User acceptance of this transition will likely resemble the adoption of electric vehicles: a large trust gap remains until the technology is proven at scale. But if successful, this technology has the potential to capture the greatest value in the field.

Who are the winners in this field?

While some standalone applications may have an advantage in distribution, it is more likely that existing protocols will directly integrate AI technology:

DEXs (decentralized exchanges): enable smarter routing and fraud protection.

Lending protocols: automatically optimize returns based on user risk profiles and repay loans when a position’s health factor falls below a set threshold, reducing liquidation risk.

Wallets: evolve into AI assistants that understand user intent.

Trading platforms: provide AI-assisted tools that help users stick to their trading strategies.
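To make the lending example concrete, here is a small worked sketch of an automatic repayment trigger. It assumes an Aave-style definition of the health factor (collateral value times liquidation threshold, divided by debt); the `trigger` and `target` values are illustrative, not taken from any protocol.

```python
def health_factor(collateral_usd, debt_usd, liquidation_threshold):
    """Aave-style health factor (assumed definition); below 1.0 is liquidatable."""
    if debt_usd <= 0:
        return float("inf")
    return collateral_usd * liquidation_threshold / debt_usd


def repay_to_restore(collateral_usd, debt_usd, liquidation_threshold,
                     trigger=1.2, target=1.5):
    """Return the USD amount to repay, or 0.0 if the position is healthy.

    Solving target = collateral * threshold / (debt - repay) for repay gives
    repay = debt - collateral * threshold / target.
    """
    if health_factor(collateral_usd, debt_usd, liquidation_threshold) >= trigger:
        return 0.0
    return debt_usd - collateral_usd * liquidation_threshold / target


# $10,000 of collateral at a 0.8 threshold against $7,000 of debt: HF is about 1.14.
repay = repay_to_restore(10_000, 7_000, 0.8)
hf_after = health_factor(10_000, 7_000 - repay, 0.8)
print(round(repay, 2), round(hf_after, 2))
```

Repaying before the health factor reaches 1.0 is the whole point: the agent acts while the user still controls the position rather than after a liquidator does.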

Final Outlook

Crypto interfaces will evolve to incorporate conversational AI that can understand a user’s financial goals and execute them more efficiently than the user could on their own.

2. Crypto helps AI: The future of decentralized AI

In my opinion, the potential for crypto to help AI is far greater than the impact of AI on crypto. Teams working on decentralized AI are exploring some of the most fundamental and practical questions about the future of AI:

Can cutting-edge models be developed without relying on massive capital expenditures from centralized tech giants?

Is it possible to coordinate globally distributed computing resources to efficiently train models or generate data?

What would happen if humanity’s most powerful technology were controlled by a few companies?

I highly recommend reading @yb_effect’s article on Decentralized AI (DeAI) for a deeper dive into this space.

This is only the tip of the iceberg, but the next wave at the intersection of crypto and AI may come from research-led academic AI teams. These teams mainly originate from the open source AI community; they deeply understand both the practical significance and the philosophical value of decentralized AI, and believe it is the best way to scale AI.

What are the current problems facing AI?

In 2017, the landmark paper Attention Is All You Need proposed the Transformer architecture, which addressed key problems that had held back deep learning for decades. Since its popularization by ChatGPT in 2022, the Transformer architecture has become the basis of most large language models (LLMs) and triggered a wave of computing power competition.

Since then, the computing power required for AI training has increased fourfold every year. This has led to a high degree of centralization in AI development, as pre-training relies on more powerful GPUs, which are only in the hands of the largest tech giants.

Centralized AI is a problem from an ideological perspective, because humanity’s most powerful tool could be controlled or withdrawn at any time by its funders. So even if open source teams can’t directly compete with the pace of progress in centralized labs, it’s still important to try to challenge that dynamic.

Crypto provides the foundation for building an open model of economic coordination. But before we can achieve this goal, we need to answer a question: what practical problems can decentralized AI solve beyond satisfying ideals? Why is it so important to get people working together?

Fortunately, the teams working in this space are very pragmatic. Open source represents a core idea of how technology scales: small groups collaborate, each optimizing toward its own local maximum and building on the others’ work, and together they reach a global maximum faster than centralized approaches that are limited by their own scale and institutional inertia.

At the same time, especially in the field of AI, open source is also a necessary condition for creating intelligence that does not moralize but can adapt to the different roles and personalities that individuals give it.

In practice, open source can open the door to innovation that addresses some very real infrastructure constraints.

The shortage of computing resources

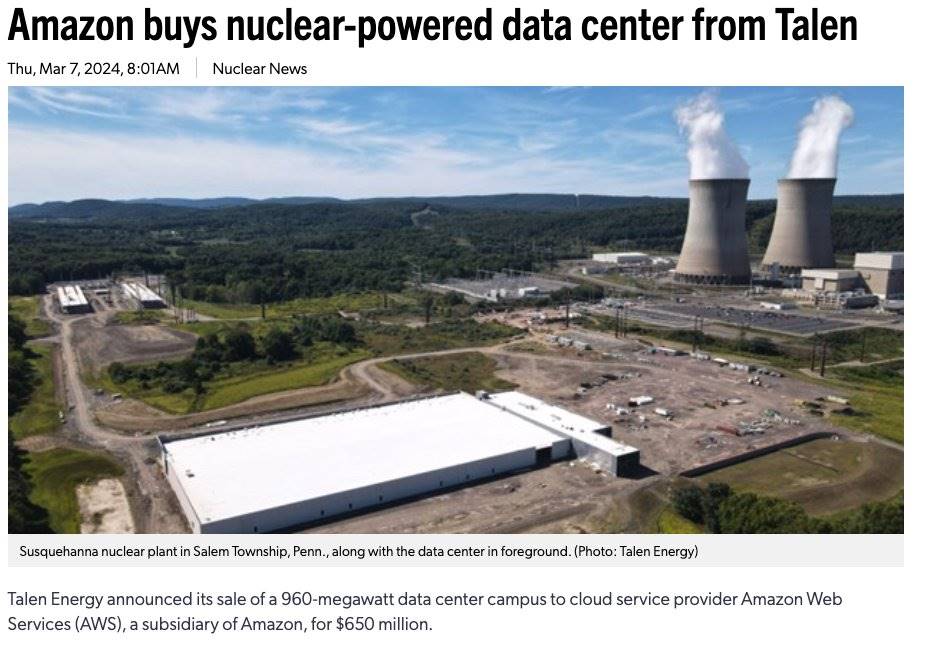

Training AI models already requires a massive energy infrastructure. There are multiple projects underway to build data centers of 1 to 5 GW. However, continued scaling of cutting-edge models will require more energy than a single data center can provide, potentially as much as an entire city consumes. The problem is not only energy output but also the physical limitations of a single data center.

Even beyond the pre-training phase of these cutting-edge models, the cost of the inference phase will increase significantly with the emergence of new reasoning models such as DeepSeek. As the @fortytwonetwork team said:

“Unlike traditional large language models (LLMs), reasoning models prioritize generating smarter responses by allocating more processing time. However, this shift comes with a trade-off: the same computing resources can only process fewer requests. To achieve these significant improvements, the model needs more “thinking” time, which further exacerbates the scarcity of computing resources.

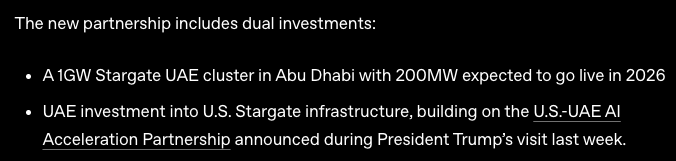

The shortage of computing resources is already evident. For example, OpenAI limits API calls to 10,000 per minute, which effectively limits AI applications to serving only about 3,000 users at a time. Even ambitious projects like Stargate—a $500 billion AI infrastructure initiative recently announced by President Trump—may only provide temporary relief.

According to Jevons Paradox, increased efficiency often leads to increased resource consumption as demand rises. As AI models become more powerful and efficient, computing demands are likely to surge due to new use cases and wider adoption.”

So where does crypto come in, and how can blockchain meaningfully help AI research and development?

Crypto technology offers a fundamentally different approach: globally distributed + decentralized training and economic coordination. Instead of building new data centers, it is possible to leverage the millions of existing GPUs — including gaming rigs, crypto mining rigs, and enterprise servers — that sit idle most of the time. Similarly, blockchains can enable decentralized inference by leveraging idle computing resources on consumer devices.

One of the main issues facing distributed training is latency. Beyond the crypto elements, teams such as Prime Intellect and Nous are working on technical breakthroughs that reduce the need for communication between GPUs:

DiLoCo (Prime Intellect): Prime Intellect’s implementation reduces communication requirements by 500x, enables cross-continental training, and achieves 90-95% compute utilization.

DisTrO/DeMo (Nous Research): Nous Research’s family of optimizers achieves an 857x reduction in communication requirements through discrete cosine transform compression.
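To show where the communication savings in this family of methods come from, here is a toy sketch of infrequent synchronization in the spirit of DiLoCo. It is not Prime Intellect’s implementation: it uses plain SGD on a one-parameter problem (the published method uses AdamW for inner steps and Nesterov momentum for the outer step), and the function name and numbers are hypothetical. In this toy the reduction factor simply equals the number of local steps between synchronizations.

```python
def local_sgd_rounds(worker_targets, inner_steps, outer_rounds,
                     inner_lr=0.1, outer_lr=1.0):
    """Each worker minimizes (theta - target)^2 on its own data shard.

    Workers take inner_steps local updates without communicating, then share
    only the change in their parameters (a "pseudo-gradient") once per round.
    """
    theta = 0.0
    sync_events = 0
    for _ in range(outer_rounds):
        deltas = []
        for target in worker_targets:
            local = theta
            for _ in range(inner_steps):
                grad = 2.0 * (local - target)  # gradient of (local - target)^2
                local -= inner_lr * grad
            deltas.append(theta - local)       # pseudo-gradient for this worker
        theta -= outer_lr * sum(deltas) / len(deltas)  # averaged outer update
        sync_events += 1
    return theta, sync_events


theta, syncs = local_sgd_rounds([1.0, 2.0, 3.0, 6.0], inner_steps=50, outer_rounds=4)
steps_per_worker = 50 * 4
# Synchronizing after every step would need 200 exchanges; here there are 4.
print(round(theta, 6), syncs, steps_per_worker // syncs)
```

The shared parameter still converges to the optimum for the pooled data (the mean of the targets), which is why trading frequent synchronization for local work can be viable over slow cross-continental links.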

However, traditional coordination mechanisms cannot solve the trust challenges inherent in decentralized AI training, and the inherent characteristics of blockchain may find product-market fit (PMF) here:

Verification and fault tolerance: Decentralized training faces the challenge of participants submitting malicious or erroneous computations. Crypto provides cryptographic verification schemes (such as Prime Intellect’s TOPLOC) and economic penalty mechanisms to deter bad behavior.

Permissionless participation: Unlike traditional distributed computing projects that require an approval process, crypto allows for truly permissionless contributions. Anyone with idle computing resources can immediately join and start earning revenue, maximizing the available resource pool.

Economic Incentive Alignment: Blockchain-based incentive mechanisms align the interests of individual GPU owners with collective training goals, making previously idle computing resources economically productive.
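The combination of verification and economic penalties can be sketched as a stake-and-slash audit. This is a deliberately naive illustration, not TOPLOC or any project’s actual scheme: the coordinator recomputes every task, whereas a practical system would sample tasks or use a cheaper proof. The task, worker names, and `slash_fraction` are hypothetical.

```python
import hashlib


def result_digest(values):
    """Commit to a result with a hash so that only the digest is compared."""
    payload = ",".join(f"{v:.6f}" for v in values).encode()
    return hashlib.sha256(payload).hexdigest()


def reference_compute(task):
    """Stand-in for the real workload: square every input."""
    return [x * x for x in task]


def audit(submissions, stakes, slash_fraction=0.5):
    """submissions: worker -> (task, claimed_digest). Returns (new_stakes, slashed)."""
    new_stakes = dict(stakes)
    slashed = []
    for worker, (task, claimed) in submissions.items():
        expected = result_digest(reference_compute(task))
        if claimed != expected:
            # A wrong or fabricated result costs the worker part of its stake.
            new_stakes[worker] = stakes[worker] * (1 - slash_fraction)
            slashed.append(worker)
    return new_stakes, slashed


task = [1.0, 2.0, 3.0]
submissions = {
    "alice": (task, result_digest(reference_compute(task))),  # honest worker
    "mallory": (task, result_digest([0.0, 0.0, 0.0])),        # skipped the work
}
stakes, slashed = audit(submissions, {"alice": 100.0, "mallory": 100.0})
print(stakes, slashed)
```

Because anyone can join without approval, the stake is what makes cheating expensive: the penalty has to outweigh the compute a dishonest worker saves.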

Given this, how can teams in the decentralized AI stack solve the scaling problem of AI and use blockchain? What are the proof points?

1. Prime Intellect: Distributed and decentralized training

DiLoCo: Reduces communication requirements by 500 times, making cross-continental training possible.

PCCL: Handles dynamic membership and node failures, and achieves cross-continental communication speeds of 45 Gbit/s.

The 32 billion parameter model is currently being trained across globally distributed worker nodes.

Achieved 90-95% compute utilization in production environments.

Results: Successfully trained INTELLECT-1 (10 billion parameters) and INTELLECT-2 (32 billion parameters), enabling large-scale model training across continents.

2. Nous Research: Decentralized training and communication optimization

DisTrO/DeMo: An 857-fold reduction in communication requirements was achieved through discrete cosine transform compression.

Psyche Network: Utilizes blockchain coordination mechanisms to provide fault tolerance and incentives that activate computing resources.

Completed one of the largest pre-training runs conducted over the internet, training Consilience (40 billion parameters).

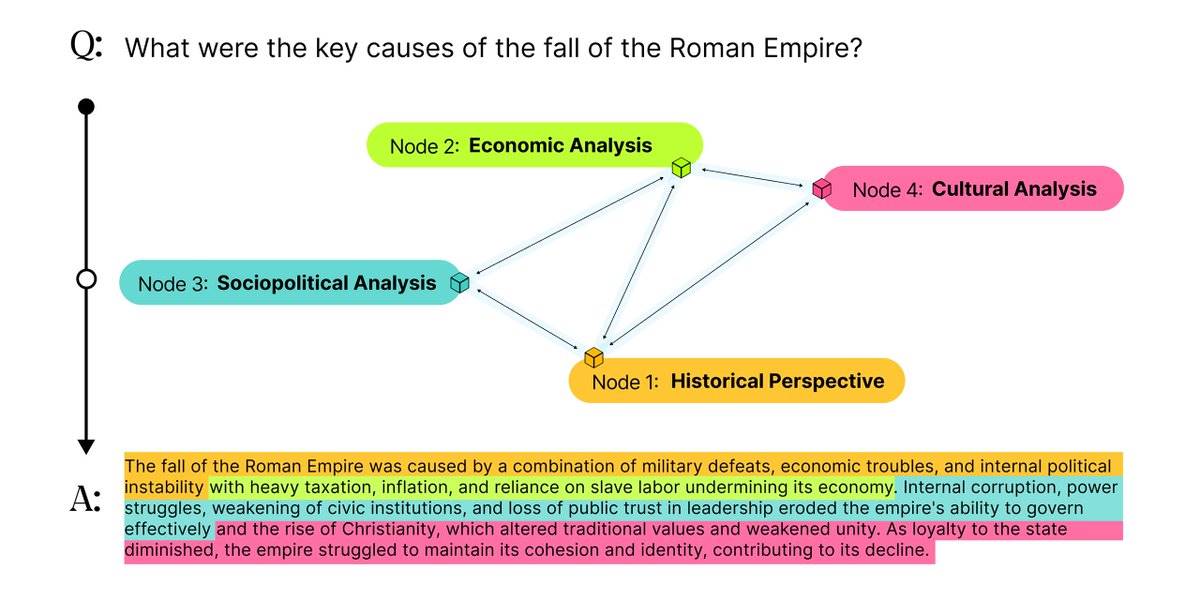
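The discrete cosine transform compression mentioned for DisTrO/DeMo can be illustrated on a single vector: transform it, keep only the largest coefficients, and send those. This is a sketch of the general idea, not Nous Research’s optimizer; the signal, the vector length, and the choice of `k` are made up so that the result is easy to check.

```python
import math


def dct(x):
    """Orthonormal DCT-II of a list of floats."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        out.append(scale * sum(v * math.cos(math.pi / n * (i + 0.5) * k)
                               for i, v in enumerate(x)))
    return out


def idct(coeffs):
    """Inverse of the orthonormal DCT-II above."""
    n = len(coeffs)
    out = []
    for i in range(n):
        total = 0.0
        for k, c in enumerate(coeffs):
            scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
            total += scale * c * math.cos(math.pi / n * (i + 0.5) * k)
        out.append(total)
    return out


def compress_top_k(x, k):
    """Keep the k largest-magnitude DCT coefficients as {index: value}."""
    coeffs = dct(x)
    keep = sorted(range(len(coeffs)), key=lambda i: abs(coeffs[i]), reverse=True)[:k]
    return {i: coeffs[i] for i in keep}


def decompress(sparse, n):
    coeffs = [0.0] * n
    for i, c in sparse.items():
        coeffs[i] = c
    return idct(coeffs)


n = 32
# A smooth signal built from a constant plus one cosine: two coefficients describe it.
signal = [0.5 + 2.0 * math.cos(math.pi / n * (i + 0.5) * 3) for i in range(n)]
sparse = compress_top_k(signal, k=2)
restored = decompress(sparse, n)
max_err = max(abs(a - b) for a, b in zip(signal, restored))
print(sorted(sparse), n // len(sparse), max_err < 1e-9)
```

Real training signals are not this sparse, so in practice the reconstruction is lossy and the achievable ratio depends on how much of the signal’s energy sits in a few frequency components.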

3. Pluralis: Protocol Learning and Model Parallelism

Pluralis uses a different approach from traditional open source AI, called Protocol Learning. Unlike the data parallel approach used by other decentralized training projects (such as Prime Intellect and Nous), Pluralis believes that data parallelism has economic flaws and that relying solely on the pooling of computing resources is not enough to meet the needs of training cutting-edge models. For example, Llama 3 (400 billion parameters) requires 16,000 80GB H100 GPUs for training.


The core idea of Protocol Learning is to introduce a real value capture mechanism for model trainers, thereby pooling the computational resources required for large-scale training. This mechanism is achieved by allocating partial model ownership proportional to training contributions. In this architecture, neural networks are trained collaboratively, but no single participant can ever extract the complete set of weights (such models are called Protocol Models). In this setting, the computational cost for any participant to obtain the complete model weights exceeds the cost of retraining the model.

The specific operation mode of protocol learning is as follows:

Model fragmentation: Each participant holds only partial fragments (shards) of the model, rather than the full set of weights.

Collaborative training: The training process requires passing activations between participants without letting anyone see the complete model.

Inference Credentials: Inference requires credentials, which are distributed according to the participants’ training contributions. In this way, contributors can earn benefits through the actual use of the model.
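The three steps above can be sketched with a toy pipeline in which each participant holds one private layer and only activations move between them. This is an illustration of the structure, not Pluralis’s protocol: the class and function names are hypothetical, each “shard” is a single scalar layer, and nothing here enforces the property that extracting the weights costs more than retraining.

```python
class ShardHolder:
    """A participant that owns one private slice of the model."""

    def __init__(self, name, weight, bias):
        self.name = name
        self._weight = weight  # kept private to this participant
        self._bias = bias

    def forward(self, activation):
        # Only the activation crosses the boundary, never the weights.
        return max(0.0, self._weight * activation + self._bias)  # ReLU layer


def pipeline_forward(holders, x):
    """Pass activations from one shard holder to the next (model parallelism)."""
    activation = x
    for holder in holders:
        activation = holder.forward(activation)
    return activation


def allocate_credentials(contributions, total_credentials):
    """Split inference credentials in proportion to training contribution."""
    total = sum(contributions.values())
    return {name: total_credentials * share / total
            for name, share in contributions.items()}


holders = [ShardHolder("a", 2.0, 1.0), ShardHolder("b", 0.5, -1.0), ShardHolder("c", 3.0, 0.0)]
output = pipeline_forward(holders, 1.0)
# Hypothetical contribution units, e.g. verified compute-hours.
credentials = allocate_credentials({"a": 50, "b": 30, "c": 20}, total_credentials=1000)
print(output, credentials)
```

The credential split is what turns training work into a claim on future usage: whoever contributed half of the training holds half of the capacity to serve, or sell, inference.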

The significance of protocol learning is to transform the model into an economic resource or commodity, enabling it to be fully financialized. In this way, protocol learning is expected to achieve the computational scale required to support truly competitive training tasks. Pluralis combines the sustainability of closed-source development (such as the stable income from the release of closed-source models) with the advantages of open-source collaboration, providing new possibilities for the development of decentralized AI.

4. Fortytwo: Decentralized Swarm Inference


While other teams focus on the challenges of distributed and decentralized training, Fortytwo focuses on distributed inference, addressing the scarcity of computing resources in the inference phase through swarm intelligence.

Fortytwo addresses the growing computational scarcity problem surrounding inference by networking specialized small language models to take advantage of idle computational power on consumer hardware, such as the M2-powered MacBook Air.

Fortytwo networks multiple small language models as nodes that evaluate each other’s contributions, amplifying the network’s effectiveness through peer-to-peer evaluation. The final response is based on the most valuable contribution in the network, which improves inference efficiency.
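A minimal sketch of peer-evaluated selection, assuming the simplest possible rule: every node scores the other nodes’ answers, self-ratings are ignored, and the answer with the highest average peer score wins. The source does not describe Fortytwo’s actual scoring protocol, so the function, node names, and scores below are hypothetical.

```python
def swarm_select(answers, peer_scores):
    """Pick the answer with the highest average score from other nodes.

    answers: node -> candidate answer
    peer_scores: rater -> {rated node: score between 0 and 1}
    """
    totals = {node: 0.0 for node in answers}
    counts = {node: 0 for node in answers}
    for rater, scores in peer_scores.items():
        for node, score in scores.items():
            if node == rater or node not in answers:
                continue  # a node may not vote for itself
            totals[node] += score
            counts[node] += 1
    averages = {n: totals[n] / counts[n] for n in answers if counts[n] > 0}
    if not averages:
        raise ValueError("no peer ratings available")
    winner = max(averages, key=averages.get)
    return winner, answers[winner]


answers = {"n1": "42", "n2": "41", "n3": "42, with derivation"}
peer_scores = {
    "n1": {"n1": 1.0, "n2": 0.2, "n3": 0.9},  # the self-rating is discarded
    "n2": {"n1": 0.6, "n2": 1.0, "n3": 0.8},
    "n3": {"n1": 0.7, "n2": 0.3},
}
winner, best_answer = swarm_select(answers, peer_scores)
print(winner, best_answer)
```

Dropping self-ratings matters in a permissionless network, since otherwise every node would simply rank its own answer first.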

Interestingly, Fortytwo’s inference network approach can complement distributed/decentralized training projects. Imagine a future scenario: the small language models (SLMs) running on Fortytwo nodes may be models trained through Prime Intellect, Nous, or Pluralis. These distributed training projects work together to create open source foundation models, which are then fine-tuned for specific domains and finally coordinated through Fortytwo’s network to complete inference tasks.

Summary

The next big opportunity for crypto and AI is not another speculative token, but the infrastructure that can truly drive AI development. Currently, the scaling bottlenecks faced by centralized AI correspond to crypto’s core strengths in global resource coordination and economic incentive alignment.

Decentralized AI opens up a parallel universe that not only expands the possibilities of AI architecture, but also explores more potential technological boundaries by combining experimental freedom with real resources.