Original author: Jiaheng Zhang

As artificial intelligence (AI) is increasingly deployed in critical areas such as healthcare, finance, and autonomous driving, ensuring the reliability, transparency, and security of machine learning (ML) inference is more important than ever.

However, traditional machine learning services often run as a black box: users see only the results and have little ability to verify how they were produced. This opacity exposes model services to several risks:

The model can be stolen,

The inference results can be maliciously tampered with,

User data can be exposed to privacy leaks.

ZKML (Zero-Knowledge Machine Learning) provides a new cryptographic solution to this challenge. It relies on zero-knowledge proofs (ZKPs) to make machine learning models cryptographically verifiable: a prover can show that a computation was performed correctly without revealing sensitive information.

In other words, ZKPs allow service providers to prove to users that:

“The inference result you received was indeed produced by running the trained model, but I will not disclose any model parameters.”

This means that users can trust the authenticity of inference results, while the structure and parameters of the model (often high-value assets) always remain private.

zkPyTorch

Polyhedra Network has launched zkPyTorch, a revolutionary compiler built specifically for zero-knowledge machine learning (ZKML), which aims to bridge the last mile between mainstream AI frameworks and ZK technology.

zkPyTorch deeply integrates PyTorch's powerful machine learning capabilities with a cutting-edge zero-knowledge proof engine. AI developers can build verifiable AI applications in a familiar environment without changing their programming habits or learning a new ZK language.

The compiler automatically translates high-level model operations (such as convolution, matrix multiplication, ReLU, softmax, and attention) into cryptographically verifiable ZKP circuits. Combined with Polyhedra's self-developed ZKML optimization suite, it compresses and accelerates mainstream inference paths while preserving both circuit correctness and computational efficiency.

Building key infrastructure for a trusted AI ecosystem

Today's machine learning ecosystem faces multiple challenges, including data security, computational verifiability, and model transparency. Especially in key industries such as healthcare, finance, and autonomous driving, AI models not only involve large amounts of sensitive personal information, but also carry high-value intellectual property and core business secrets.

Zero-knowledge machine learning (ZKML) emerged as an important breakthrough for resolving this dilemma. Using zero-knowledge proofs (ZKPs), ZKML can verify the integrity of model inference without leaking model parameters or input data, protecting privacy while ensuring credibility.

In practice, however, ZKML development has a very high barrier to entry: it requires a deep background in cryptography, which most AI engineers do not have.

This is exactly the gap zkPyTorch fills. It builds a bridge between PyTorch and the ZKP engine, allowing developers to build privacy-preserving, verifiable AI systems with familiar code, without having to learn a specialized cryptographic language.

Through zkPyTorch, Polyhedra Network is significantly reducing the technical barriers of ZKML, promoting scalable and trustworthy AI applications into the mainstream, and reconstructing a new paradigm for AI security and privacy.

zkPyTorch Workflow

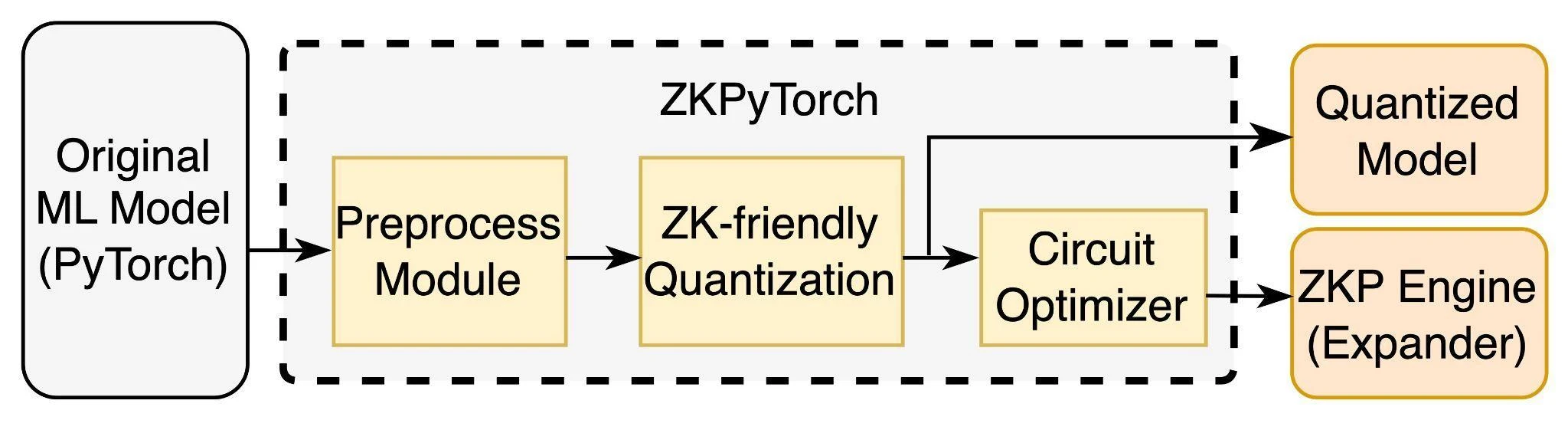

Figure 1: Overview of the zkPyTorch architecture

As shown in Figure 1, zkPyTorch automatically converts the standard PyTorch model into a ZKP (zero-knowledge proof) compatible circuit through three carefully designed modules: preprocessing module, zero-knowledge friendly quantization module, and circuit optimization module.

This process does not require developers to master any cryptographic circuits or special syntax: developers only need to use standard PyTorch to write models, and zkPyTorch can convert them into circuits that can be recognized by zero-knowledge proof engines such as Expander, and generate corresponding ZK proofs. This highly modular design greatly reduces the development threshold of ZKML, allowing AI developers to easily build efficient, secure, and verifiable machine learning applications without switching languages or learning cryptography.

Module 1: Model Preprocessing

In the first stage, zkPyTorch converts the PyTorch model into a structured computational graph using the Open Neural Network Exchange format (ONNX). ONNX is a widely used intermediate representation standard that can uniformly represent various complex machine learning operations. Through this preprocessing step, zkPyTorch can clarify the model structure and disassemble the core computational process, laying a solid foundation for the subsequent generation of zero-knowledge proof circuits.

Module 2: ZKP-friendly quantization

The quantization module is a key part of the ZKML system. Traditional machine learning models rely on floating-point operations, while the ZKP environment is more suitable for integer operations in finite fields. zkPyTorch uses an integer quantization scheme optimized for finite fields to accurately map floating-point calculations to integer calculations, while converting nonlinear operations that are not conducive to ZKP (such as ReLU, Softmax) into efficient lookup table forms.

This strategy not only significantly reduces circuit complexity, but also improves the verifiability and operational efficiency of the entire system while ensuring model accuracy.
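The float-to-integer mapping described above can be illustrated with a minimal fixed-point sketch. This is not zkPyTorch's actual quantization scheme: the scale of 8 fractional bits, the example prime modulus, and all function names are assumptions chosen for illustration.

```python
# Illustrative fixed-point quantization; NOT zkPyTorch's actual scheme.
SCALE_BITS = 8               # assumed number of fractional bits
SCALE = 1 << SCALE_BITS      # 256
P = (1 << 61) - 1            # example prime modulus (assumption)

def quantize(x):
    """Map a float to a fixed-point integer."""
    return round(x * SCALE)

def dequantize(q):
    """Map a fixed-point integer back to a float."""
    return q / SCALE

def int_dot(a, b):
    """Dot product using only integer multiply-add."""
    return sum(x * y for x, y in zip(a, b))

def rescale(q):
    """Drop one factor of SCALE after a multiplication, rounding to nearest."""
    return (q + SCALE // 2) >> SCALE_BITS

def to_field(q):
    """Represent a signed integer as a field element (negatives wrap to P - |q|)."""
    return q % P

def from_field(f):
    """Interpret the upper half of the field as negative numbers."""
    return f if f <= P // 2 else f - P

weights = [0.5, -1.25, 2.0]
inputs = [1.0, 0.75, -0.5]
qw = [quantize(w) for w in weights]
qx = [quantize(x) for x in inputs]

acc = int_dot(qw, qx)        # carries a factor of SCALE**2
out = rescale(acc)           # back to a single factor of SCALE
float_result = sum(w * x for w, x in zip(weights, inputs))

# The same accumulation carried out entirely with field elements.
field_acc = sum(to_field(a) * to_field(b) for a, b in zip(qw, qx)) % P
```

Here `dequantize(out)` reproduces `float_result` because every value happens to be exactly representable at this scale. In general the two differ by a rounding error bounded by the chosen scale.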

Module 3: Hierarchical Circuit Optimization

zkPyTorch uses a multi-level strategy for circuit optimization, including:

Batch optimization is designed for sequential computation. It greatly reduces computational complexity and resource consumption by processing multiple inference steps at once, and is especially suited to verifying Transformer-based large language models.

Primitive operation acceleration combines fast Fourier transform (FFT) convolution with lookup table technology to effectively improve the circuit execution speed of basic operations such as convolution and Softmax, fundamentally enhancing the overall computing efficiency.

Parallel circuit execution fully leverages the computing power of multi-core CPUs and GPUs, splitting heavy-load calculations such as matrix multiplication into multiple subtasks for parallel execution, significantly improving the speed and scalability of zero-knowledge proof generation.

In-depth technical discussion

Directed Acyclic Graph (DAG)

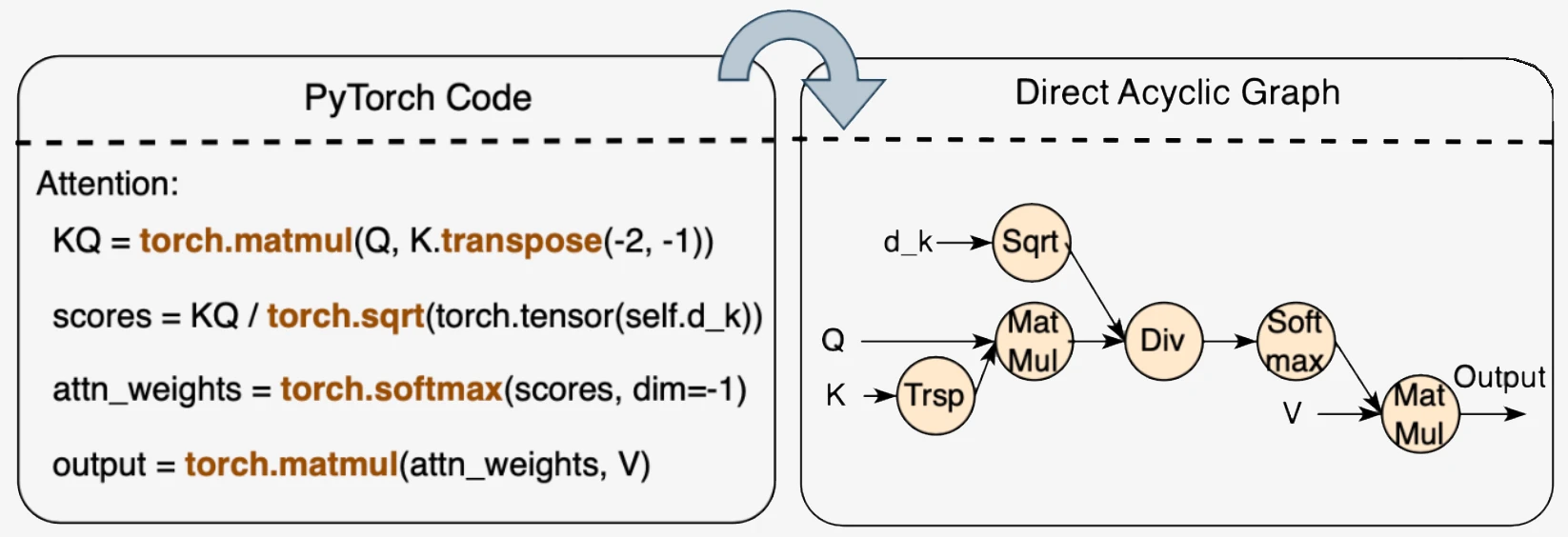

zkPyTorch uses a directed acyclic graph (DAG) to manage the computational process of machine learning. The DAG structure can systematically capture complex model dependencies, as shown in Figure 2. Each node in the graph represents a specific operation (such as matrix transposition, matrix multiplication, division, and Softmax), while the edges accurately describe the data flow between these operations.

This clear, structured representation not only simplifies debugging but also enables in-depth performance optimization. Because the graph is acyclic, there are no circular dependencies, so operations can be executed in an efficient, well-defined order, which is crucial for optimizing the generation of zero-knowledge proof circuits.

In addition, the DAG enables zkPyTorch to efficiently process complex model architectures such as Transformers and residual networks (ResNet). These models typically have multi-path, nonlinear, and complex data flows, which a DAG represents naturally, ensuring accurate and efficient model inference.

Figure 2: Example of a machine learning model represented as a directed acyclic graph (DAG)
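To make the DAG idea concrete, here is a small sketch that evaluates an attention-style graph in dependency order. It uses Python's standard `graphlib.TopologicalSorter`. The node names, the graph layout, and the `run_dag` helper are hypothetical and do not reflect zkPyTorch's internal representation.

```python
import math
from graphlib import TopologicalSorter

def transpose(m):
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def divide(m, c):
    return [[v / c for v in row] for row in m]

def softmax(m):
    out = []
    for row in m:
        peak = max(row)                      # subtract the max for stability
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

# Hypothetical graph: node name -> (operation, names of input nodes).
graph = {
    "k_t":    (transpose, ["k"]),
    "scores": (matmul, ["q", "k_t"]),
    "scaled": (lambda m: divide(m, math.sqrt(2)), ["scores"]),
    "probs":  (softmax, ["scaled"]),
    "out":    (matmul, ["probs", "v"]),
}

def run_dag(graph, inputs):
    """Evaluate every node after all of its predecessors."""
    deps = {name: set(srcs) for name, (_, srcs) in graph.items()}
    order = list(TopologicalSorter(deps).static_order())
    values = dict(inputs)
    for name in order:
        if name in values:                   # graph inputs are already known
            continue
        op, srcs = graph[name]
        values[name] = op(*[values[s] for s in srcs])
    return order, values

inputs = {
    "q": [[1.0, 0.0], [0.0, 1.0]],
    "k": [[1.0, 0.0], [0.0, 1.0]],
    "v": [[1.0, 2.0], [3.0, 4.0]],
}
order, values = run_dag(graph, inputs)
```

If the graph contained a cycle, `TopologicalSorter` would raise `graphlib.CycleError` instead of producing an order. That is the property the acyclic structure guarantees away.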

Advanced Quantization Techniques

In zkPyTorch, advanced quantization techniques are a key step in converting floating-point computations into integer operations suitable for efficient finite field arithmetic in zero-knowledge proof (ZKP) systems. zkPyTorch uses a static integer quantization method that is carefully designed to balance computational efficiency and model accuracy, ensuring that it is both fast and accurate when generating proofs.

This quantization process involves strict calibration to determine the optimal quantization scale, so that floating-point numbers are represented effectively while overflow and significant loss of precision are avoided. For nonlinear operations that are particularly costly in ZKP circuits (such as Softmax and layer normalization), zkPyTorch transforms these complex functions into efficient table lookup operations.

This strategy not only greatly improves the efficiency of proof generation, but also ensures that the proven results are fully consistent with the output of the high-precision quantized model. It balances performance and credibility and advances the practical application of verifiable machine learning.
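The two ideas in this section, calibrating a scale from sample data and replacing a nonlinear function with a precomputed table, can be sketched as follows. The symmetric 8-bit calibration rule, the table layout, the `OUT_SCALE` constant, and all function names are assumptions for illustration. The source does not describe zkPyTorch's actual calibration procedure or table construction.

```python
import math

OUT_SCALE = 1 << 12   # assumed fixed-point scale for table outputs

def calibrate_scale(samples, bits=8):
    """Symmetric static calibration: map the largest observed magnitude to qmax."""
    qmax = (1 << (bits - 1)) - 1
    peak = max(abs(s) for s in samples)
    return qmax / peak, qmax

def quantize(x, scale, qmax):
    """Round to the nearest step and clamp so out-of-range values cannot overflow."""
    return max(-qmax, min(qmax, round(x * scale)))

def build_exp_table(scale, qmax):
    """Precompute exp for every possible value of (q - max(q)), which is <= 0."""
    return {q: round(math.exp(q / scale) * OUT_SCALE) for q in range(-2 * qmax, 1)}

def softmax_lookup(q_logits, table):
    """Integer softmax whose only nonlinear step is a table lookup."""
    peak = max(q_logits)
    exps = [table[q - peak] for q in q_logits]
    total = sum(exps)
    # Integer division with rounding; results are probabilities times OUT_SCALE.
    return [(e * OUT_SCALE + total // 2) // total for e in exps]

calibration_samples = [-4.0, -1.0, 0.5, 2.0, 4.0]   # placeholder data
scale, qmax = calibrate_scale(calibration_samples)
table = build_exp_table(scale, qmax)

logits = [1.0, 2.0, 3.0]
q_logits = [quantize(x, scale, qmax) for x in logits]
q_probs = softmax_lookup(q_logits, table)
probs = [p / OUT_SCALE for p in q_probs]

# Floating-point reference for comparison.
ref_exps = [math.exp(x) for x in logits]
reference = [e / sum(ref_exps) for e in ref_exps]
```

With the table fixed in advance, checking the exponential reduces to showing that each (input, output) pair appears in the table, which is the kind of statement lookup-based proof systems are built for. The remaining error against `reference` comes from the coarse 8-bit input scale rather than from the lookup itself.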

Multi-level circuit optimization strategy

zkPyTorch uses a sophisticated multi-level circuit optimization system to maximize the efficiency and scalability of zero-knowledge inference along several dimensions:

Batch Processing Optimization

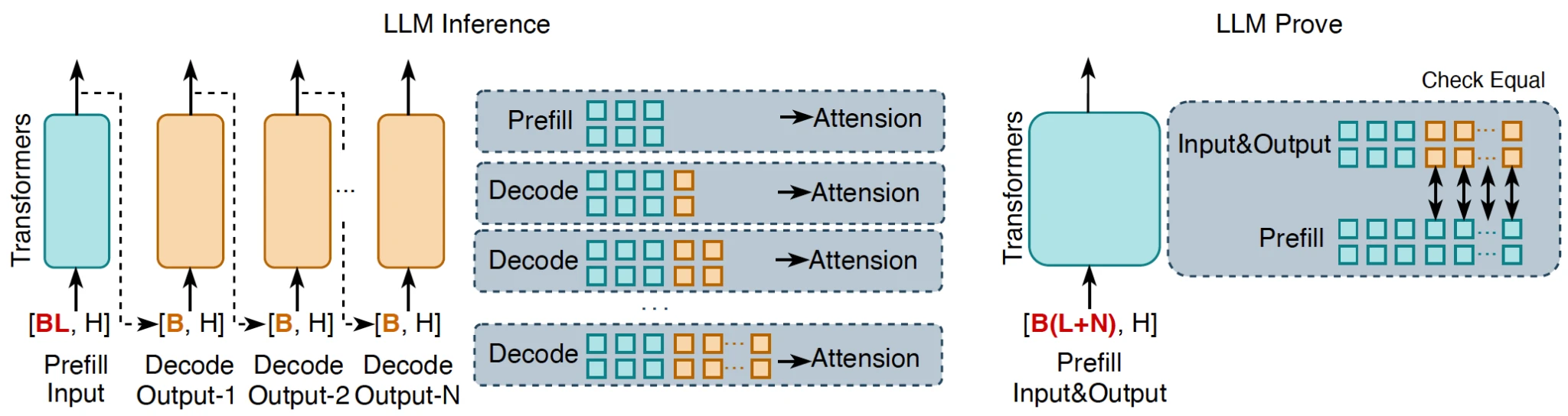

Packaging multiple inference steps into a batch significantly reduces the overall computational complexity, which is especially suited to the sequential operations of Transformer-based language models. As shown in Figure 3, traditional large language model (LLM) inference generates output token by token, whereas zkPyTorch's method aggregates all input and output tokens into a single prompt pass for verification. This confirms the correctness of the entire LLM inference at once, while ensuring that each output token is consistent with standard LLM inference.

In LLM inference, the correctness of the KV cache (key-value cache) mechanism is key to the credibility of the output. If the model's inference logic is wrong, then even with the cache it is impossible to reproduce results consistent with the standard decoding process. By accurately reproducing this process, zkPyTorch ensures that every output covered by the zero-knowledge proof has verifiable determinism and integrity.

Figure 3: Batch verification of large language model (LLM) computation, where L is the input sequence length, N is the output sequence length, and H is the hidden dimension of the model.
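Why a single pass over all tokens can stand in for token-by-token decoding is easiest to see on a toy example. The sketch below is a deliberately simplified single-head attention layer in which each token's embedding serves as its query, key, and value. That simplification and all function names are assumptions, not zkPyTorch's construction. It compares incremental decoding with a KV cache against one pass with a causal mask.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values):
    """Softmax attention of one query over the given keys and values."""
    scale = math.sqrt(len(query))
    scores = [dot(query, k) / scale for k in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]

def decode_token_by_token(embeddings):
    """Standard decoding: each step appends to the KV cache and attends over it."""
    k_cache, v_cache, outputs = [], [], []
    for x in embeddings:
        k_cache.append(x)    # toy model: key = value = query = embedding
        v_cache.append(x)
        outputs.append(attend(x, k_cache, v_cache))
    return outputs

def forward_in_one_pass(embeddings):
    """One pass over the whole sequence; a causal mask hides future positions."""
    dim = len(embeddings[0])
    scale = math.sqrt(dim)
    scores = [[dot(q, k) / scale for k in embeddings] for q in embeddings]
    outputs = []
    for i, row in enumerate(scores):
        masked = [s if j <= i else -math.inf for j, s in enumerate(row)]
        peak = max(masked)
        weights = [math.exp(s - peak) for s in masked]   # exp(-inf) is 0.0
        total = sum(weights)
        outputs.append([sum(w * v[d] for w, v in zip(weights, embeddings)) / total
                        for d in range(dim)])
    return outputs

sequence = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0], [1.0, 1.0]]
step_outputs = decode_token_by_token(sequence)
batch_outputs = forward_in_one_pass(sequence)
```

Both functions return the same output at every position, so in this toy setting checking the single pass also checks every step of the incremental decoding.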

Optimized Primitive Operations

zkPyTorch has deeply optimized the underlying machine learning primitives, greatly improving circuit efficiency. For example, convolution operations have always been computationally intensive tasks. zkPyTorch uses an optimization method based on fast Fourier transform (FFT) to convert the convolution originally performed in the spatial domain into a multiplication operation in the frequency domain, significantly reducing the computational cost. At the same time, for nonlinear functions such as ReLU and softmax, the system uses a pre-calculated lookup table to avoid ZKP-unfriendly nonlinear calculations, greatly improving the operating efficiency of the inference circuit.
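The frequency-domain trick can be demonstrated on a one-dimensional example. The sketch below uses a textbook recursive FFT over complex floating-point numbers purely to show the identity "convolution in the spatial domain equals pointwise multiplication in the frequency domain". It is an assumption-laden illustration: a proof system working over a finite field would need a transform defined in that field, and the source does not describe zkPyTorch's actual construction.

```python
import cmath

def fft(a, invert=False):
    """Recursive radix-2 FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def fft_convolve(x, h):
    """Linear convolution of two integer sequences via pointwise multiplication."""
    size = len(x) + len(h) - 1
    n = 1
    while n < size:                      # pad to the next power of two
        n *= 2
    fx = fft(list(x) + [0] * (n - len(x)))
    fh = fft(list(h) + [0] * (n - len(h)))
    product = [a * b for a, b in zip(fx, fh)]
    result = fft(product, invert=True)
    # The inverse transform needs a 1/n factor; rounding recovers the integers.
    return [round((v / n).real) for v in result[:size]]

def direct_convolve(x, h):
    """Reference implementation with one multiplication per (i, j) pair."""
    out = [0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for j, hv in enumerate(h):
            out[i + j] += xv * hv
    return out

signal = [1, 2, 3, 4]
kernel = [1, 0, -1]
via_fft = fft_convolve(signal, kernel)
via_direct = direct_convolve(signal, kernel)
```

The direct method performs on the order of n·m multiplications for inputs of length n and m, while the transform-based method performs on the order of n log n operations, which is where the savings for large convolutions come from. The convolution layers used in neural networks are usually implemented as cross-correlation, which is the same computation with the kernel reversed.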

Parallel Circuit Execution

zkPyTorch automatically compiles complex ML operations into parallel circuits, fully unleashing the hardware potential of multi-core CPUs/GPUs and enabling large-scale parallel proof generation. For example, when performing tensor multiplication, zkPyTorch automatically splits the computational task into multiple independent subtasks and distributes them to multiple processing units for concurrent execution. This parallelization strategy not only significantly improves circuit execution throughput, but also makes efficient verification of large models a reality, opening up a new dimension for scalable ZKML.
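The splitting of a matrix multiplication into independent subtasks can be sketched with the standard library. The row-block decomposition, the `workers` parameter, and the function names are assumptions for illustration. Threads are used only to show the decomposition: in CPython, pure-Python arithmetic running in threads is limited by the global interpreter lock, so this sketch does not itself demonstrate a speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def matmul_rows(a_rows, b):
    """Multiply a block of rows of A by the full matrix B."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a_rows]

def parallel_matmul(a, b, workers=4):
    """Split A into row blocks; each block is an independent subtask."""
    chunk = max(1, -(-len(a) // workers))          # ceiling division
    blocks = [a[i:i + chunk] for i in range(0, len(a), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in submission order, so rows stay in place.
        parts = list(pool.map(lambda rows: matmul_rows(rows, b), blocks))
    return [row for part in parts for row in part]

a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8], [9, 10]]
sequential = matmul_rows(a, b)
parallel = parallel_matmul(a, b, workers=2)
```

Because each row block of the result depends only on its own rows of `a` and on all of `b`, the subtasks share no intermediate state and can be computed, or proven, independently.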

Comprehensive performance testing: Double breakthrough in performance and accuracy

zkPyTorch has passed rigorous benchmark tests and demonstrated excellent performance and practical usability in multiple mainstream machine learning models:

VGG-16 model testing

On the CIFAR-10 dataset, zkPyTorch can complete the VGG-16 proof generation for a single image in just 6.3 seconds, with accuracy almost identical to that of traditional floating-point computation. This indicates that ZKML is already practical for classic tasks such as image recognition.

Llama-3 Model Testing

For the Llama-3 large language model with 8 billion parameters, zkPyTorch generates proofs in about 150 seconds per token. Its output also maintains a cosine similarity of 99.32% with the original model, so the proof provides high credibility while preserving the semantic consistency of the model output.
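For readers unfamiliar with the metric, cosine similarity measures the angle between two vectors and ignores their magnitudes. The source does not say which vectors were compared to obtain the reported figure, so the sketch below uses placeholder values, not measurements.

```python
import math

def cosine_similarity(a, b):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Placeholder vectors standing in for original and quantized model outputs.
original_output = [0.2, 1.5, -0.7, 3.1]
quantized_output = [0.25, 1.5, -0.75, 3.0]
similarity = cosine_similarity(original_output, quantized_output)
```

A value of 1.0 means the two vectors point in exactly the same direction, and a value of 0.0 means they are orthogonal.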

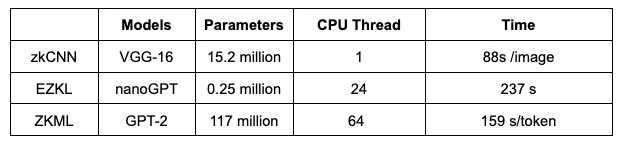

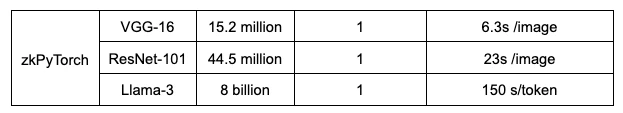

Table 1: Performance of various ZKP schemes in convolutional neural networks and transformer networks

Wide range of real-world application scenarios

Verifiable Machine Learning as a Service (Verifiable MLaaS)

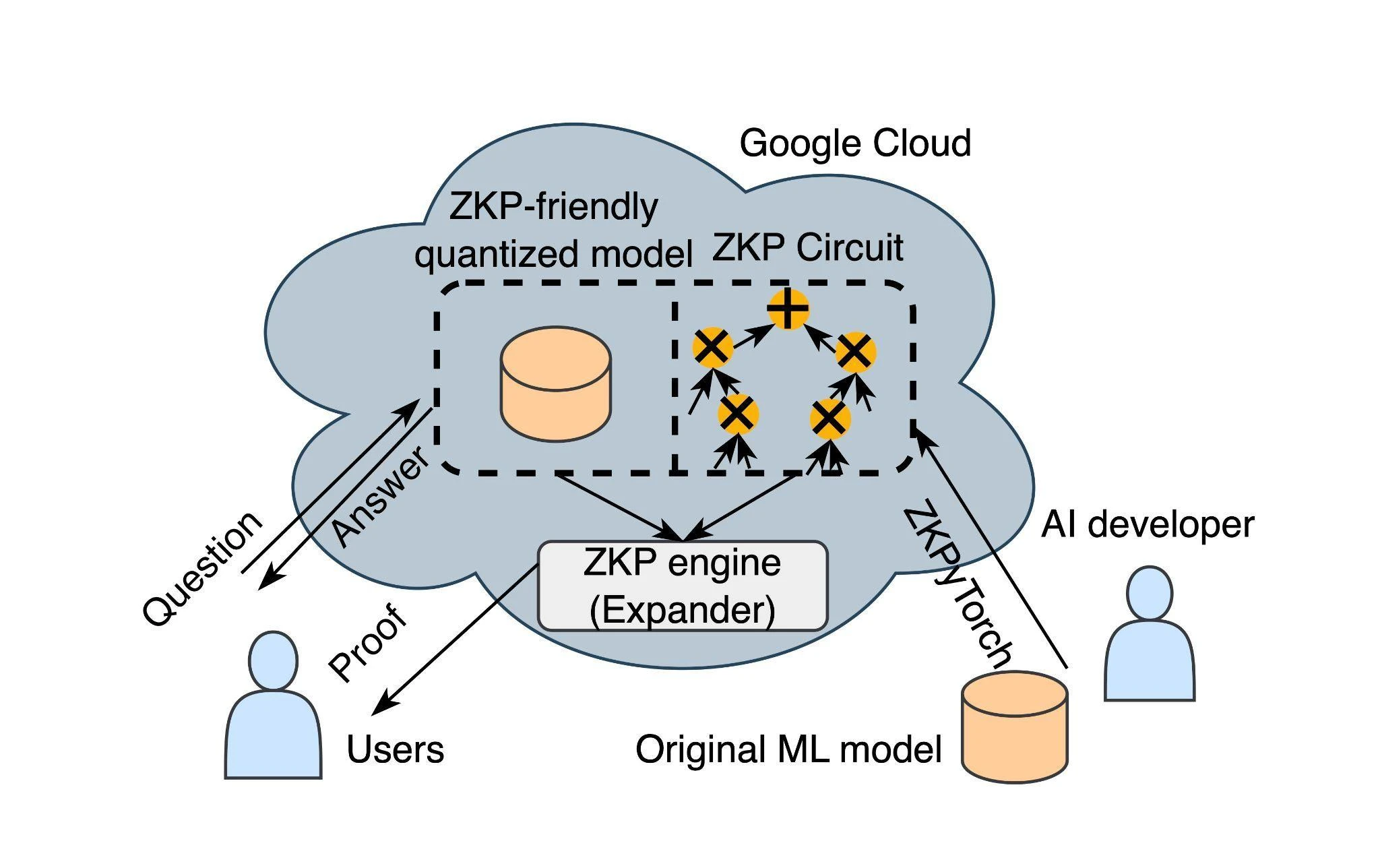

As the value of machine learning models continues to rise, more and more AI developers deploy their self-developed models to the cloud and offer them as Machine-Learning-as-a-Service (MLaaS). In practice, however, users find it difficult to verify whether the inference results are genuine and reliable, while model providers want to protect core assets such as model structure and parameters from theft or abuse.

zkPyTorch was created to resolve this tension: it gives cloud-based AI services native “zero-knowledge verification capabilities” and makes inference results cryptographically verifiable.

As shown in Figure 4, developers can connect large models such as Llama-3 directly to zkPyTorch to build a trusted MLaaS system with zero-knowledge proof capabilities. By integrating seamlessly with the underlying ZKP engine, zkPyTorch automatically generates proofs without exposing model details and verifies that each inference was executed correctly, establishing a solid foundation of trust between model providers and users.

Figure 4: Application scenarios of zkPyTorch in Verifiable Machine Learning as a Service (Verifiable MLaaS).

Safeguarding model valuation

zkPyTorch provides a secure and verifiable AI model evaluation mechanism, allowing stakeholders to carefully evaluate key performance indicators without exposing model details. This zero leakage valuation method establishes a new trust standard for AI models, while improving the efficiency of commercial transactions and protecting the intellectual property security of developers. It not only improves the visibility of model value, but also brings higher transparency and fairness to the entire AI industry.

Deep integration with EXPchain blockchain

zkPyTorch integrates natively with EXPchain, the blockchain network developed by Polyhedra Network, to build a trusted decentralized AI infrastructure. This integration provides a highly optimized path for smart contract calls and on-chain verification, allowing AI inference results to be cryptographically verified and permanently stored on the blockchain.

With zkPyTorch and EXPchain working together, developers can build end-to-end verifiable AI applications, from model deployment and inference to on-chain verification. The result is a transparent, trustworthy, and auditable AI computing process that provides underlying support for the next generation of blockchain + AI applications.

Future roadmap and continuous innovation

Polyhedra will continue to advance the evolution of zkPyTorch, focusing on the following areas:

Open source and community building

Gradually open-source the core components of zkPyTorch to encourage participation from developers worldwide and promote collaborative innovation and a thriving ecosystem in zero-knowledge machine learning.

Expanding model and framework compatibility

Expand support for mainstream machine learning models and frameworks, further enhance the adaptability and versatility of zkPyTorch, and enable it to be flexibly embedded in various AI workflows.

Development Tools and SDK Build

Launch a comprehensive development toolchain and software development kit (SDK) to simplify the integration process and accelerate the deployment and application of zkPyTorch in actual business scenarios.

Conclusion

zkPyTorch is an important milestone towards the future of trusted AI. By deeply integrating the mature PyTorch framework with cutting-edge zero-knowledge proof technology, zkPyTorch not only significantly improves the security and verifiability of machine learning, but also reshapes the deployment method and trust boundary of AI applications.

Polyhedra will continue to deepen innovation in the field of Secure AI, promote machine learning to higher standards in privacy protection, result verifiability and model compliance, and help build transparent, trustworthy and scalable intelligent systems.

Stay tuned as we continue to release the latest developments and witness how zkPyTorch will reshape the future of the secure intelligence era.